Deepfake technology can create extremely realistic videos, images and audio, and it is becoming increasingly popular. It is used not just for eye-catching fakes (such as those featuring George Clooney) or for efforts to influence public opinion, but also for identity theft and all sorts of scams.

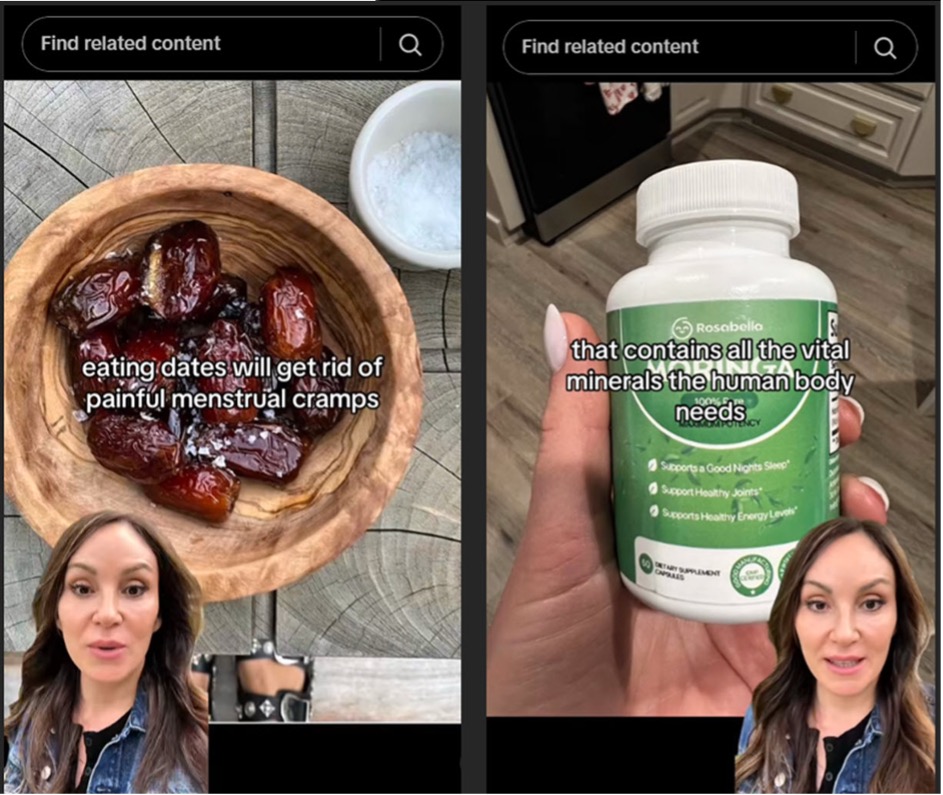

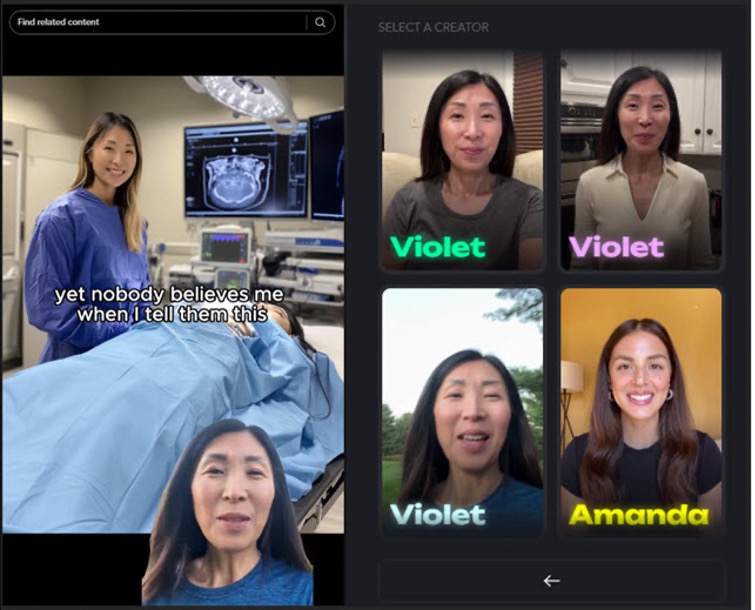

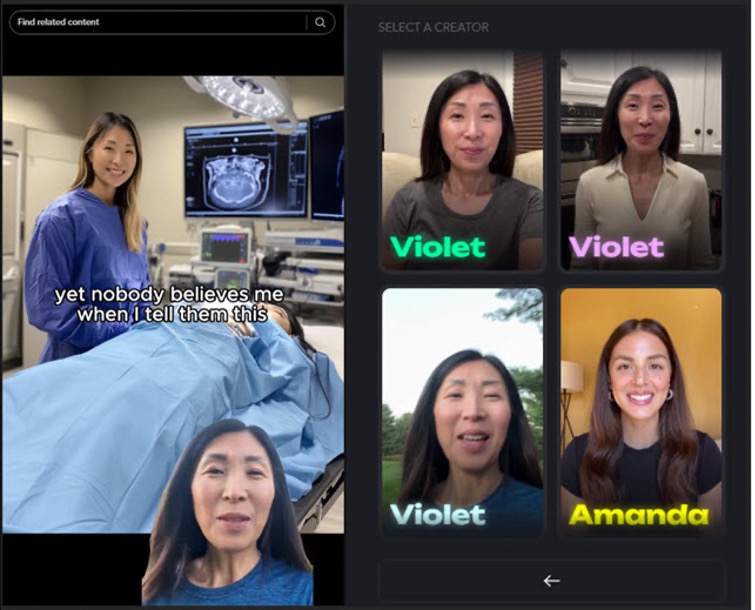

On social media platforms such as TikTok and Instagram, the spread of deepfakes, and their potential for harm, can be particularly alarming. ESET researchers in Latin America recently identified a campaign on these platforms in which AI-generated avatars posed as gynecologists, dietitians and other health professionals in order to promote supplements and wellness products. These videos, often extremely well made and convincing, were presented as medical advice, misleading unsuspecting users and persuading them to make questionable (and potentially dangerous) purchases.

Each video follows a similar pattern: a talking avatar, usually positioned in a corner of the screen, offers health or beauty tips. The advice is delivered with an air of scientific authority and centers mainly on "natural" treatments, indirectly steering viewers toward specific products for sale. By playing the role of the expert, these deepfake avatars exploit the public's trust in the medical profession to boost sales, a common and effective tactic.

In one case, a "doctor" advertises a "natural extract" as a superior alternative to Ozempic, the well-known drug used for weight loss. The video promises spectacular results and links to an Amazon page where the product is described simply as "relaxation drops" or "anti-edema aids", with no connection to the exaggerated benefits advertised.

Other videos promote unapproved drugs or fake treatments for serious illnesses, and some even use falsified images or videos of well-known doctors.

The use of artificial intelligence

These videos are created with artificial intelligence tools that let anyone upload a short clip and turn it into a lifelike avatar. Although this technology offers an opportunity for influencers who want to expand their online presence, it can also be used to promote misleading claims and deceive the public. In other words, a marketing tool can easily be turned into a vehicle for misinformation.

"We have identified more than 20 accounts on TikTok and Instagram that use fake doctors to promote their products," say Martina López and Tomáš Foltýn of the global cybersecurity company ESET. "In one case, an account posed as a gynecologist with 13 years of experience, and the avatar was in fact found directly in the app's avatar library. Although such abuse violates the terms of use of most AI tools, it highlights how easily they can be turned into instruments of misinformation."

The consequences can be very serious, as these deepfakes can erode trust in online health advice, promote harmful "treatments" and delay proper medical care.

Keeping fake doctors at a distance

As artificial intelligence becomes more and more accessible, spotting fakes such as deepfake videos is getting harder, even for tech-savvy people. However, there are some signs that can help you identify them:

- Imperfect lip-syncing with the audio, or facial expressions that look stiff and unnatural.

- Visual glitches, such as blurry edges or sudden changes in lighting, often betray the artificial nature of the video.

- A robotic or overly polished voice can also be a sign of a fake.

- Check the account that posted the content: a new profile with few followers or no posting history should raise suspicion.

- Beware of exaggerated claims, such as "miracle cures", "guaranteed results" or "doctors hate this trick", especially when they are not backed by reliable sources.

- Always verify claims against reputable medical or scientific sources, avoid sharing suspicious content, and report misleading videos on the platform where you find them.

As artificial intelligence tools evolve, distinguishing authentic from fake content will become increasingly difficult. This threat highlights the need both to develop technological safeguards and to improve our collective digital literacy. These efforts are critical for protecting us from misinformation and fraud that can harm our health and our financial well-being.