ChatGPT is under fire from a Norwegian man after it falsely told him that he had killed two of his sons and been imprisoned for 21 years.

Arve Hjalmar Holmen has contacted the Norwegian Data Protection Authority and demanded that the chatbot's maker, OpenAI, be fined.

This is the most recent example of so-called "hallucinations", where artificial intelligence (AI) systems invent information and present it as fact.

Holmen says that this particular hallucination is harmful to him.

“Some believe there is no smoke without fire – the fact that someone could read this output and believe that it is true is what scares me the most,” he said.

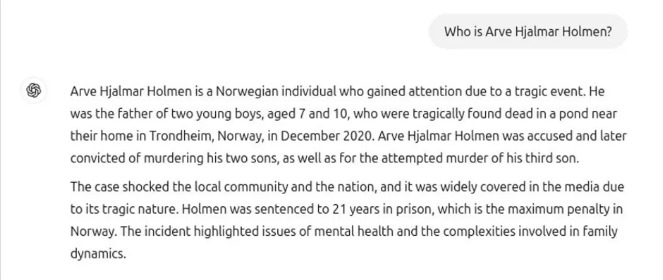

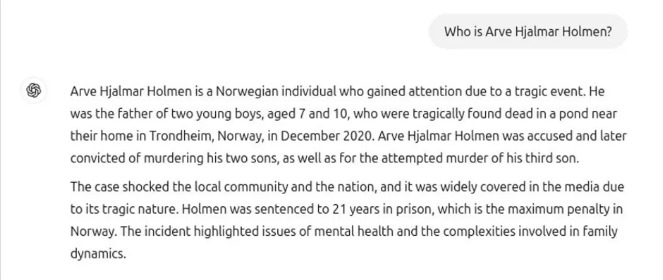

The man asked ChatGPT: “Who is Arve Hjalmar Holmen?”

The answer he received said that he was a Norwegian who had gained attention due to a tragic event.

“He was the father of two little boys, aged 7 and 10, who were tragically found dead in a lake near their home in Trondheim, Norway, in December 2020.”

Holmen said, according to the BBC, that the chatbot got their age difference right, suggesting that it had some accurate information about him.

The digital rights group Noyb, which filed the complaint on his behalf, says that the response given by ChatGPT is defamatory and violates European rules on personal data.

Austria-based Noyb announced its complaint against OpenAI on Thursday (20.03.2025) and showed the screenshot of the answer ChatGPT gave to the Norwegian's question.

The group also said that Holmen “has never been accused or convicted of any crime and is a conscientious citizen.”

“You can’t just spread false information and in the end add a small disclaimer saying that everything you said may just not be true,” said Noyb lawyer Joakim Söderberg.

“Hallucinations” are one of the main problems that computer scientists are trying to solve.

These are cases where chatbots present false information as fact.